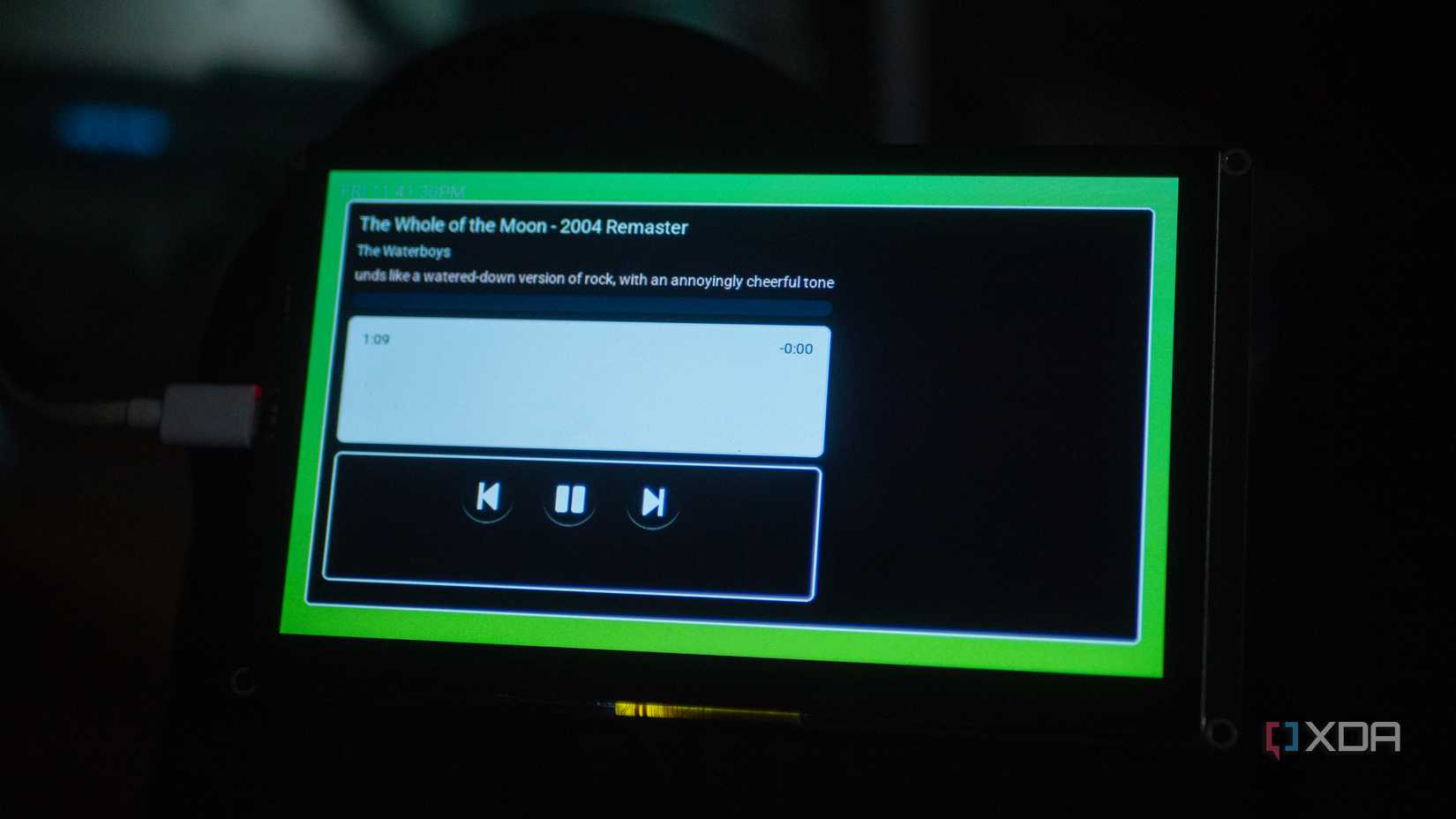

I’ve been playing around with ESP32 devices for a long time now, increasing both the complexity of the projects and, at times, the weirdness of them. That’s why I built a Spotify “now playing” display that allows me to control my music, shows what’s playing, and inserts a little dumb quip below it as well.

While adding an insulting quip below the track may come across as silly or needless, that’s also kind of the point. It’s fun, even if it’s slightly cringey, and it demonstrates how you could put locally generated text on a display like this in a way that’s genuinely useful rather than just for fun.

I’m using the Elecrow 7-inch ESP32 AI display for this, but any ESP32-S3-based panel will do the job, so long as it has enough PSRAM for the album art. All of the code is written for ESPHome, and it pulls the data from Home Assistant’s Spotify integration.

I actually really like how it looks

Even if it took a while to get right

Quips aside, I’m actually a big fan of how this design turned out. I had played around with a basic Spotify controller on a significantly smaller display than this before, so I could repurpose some of my code and introduce new features and tweaks that I’ve learned since I started working with LVGL and ESPHome. That included a background with a Spotify-green (ish) gradient and properly laying out everything on the screen.

The part that I’m most proud of, though, is the album art. This particular Elecrow panel is powered by an ESP32-S3 N16R8, meaning it has 16MB of flash and 8MB of PSRAM. That PSRAM is especially important: the roadblocks I hit with previous projects like this came down to how hard it was to find a contiguous block of RAM large enough to hold an image, especially the high-resolution image that the Home Assistant API serves up for the currently playing track.

In fact, my previous escapades involved writing a small Python helper script that would scrape that URL and resize it for displaying it on one of these, but the ESP32-S3 is more than capable of handling it. It downloads the image (along with all of the other data) and resizes it before showing it on the screen, and I’ve not faced any difficulties with that solution here. I collect all of my Spotify details and use a template to pass them to a new entity, just so I can easily reference them on the device and have finer control over the sensors and entities ingested.
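As a rough idea of what that template entity could look like, here is a minimal sketch of a Home Assistant template sensor. The `spotify_song` and `spotify_artist` attribute names match the ones referenced in the rest_command later on; `media_player.spotify` and the `spotify_image` attribute are placeholder names assumed for illustration.

```yaml
# configuration.yaml (sketch; entity and attribute names are partly assumed)
template:
  - sensor:
      - name: "Spotify Details"   # becomes sensor.spotify_details
        # State mirrors the player state (playing, paused, idle, ...)
        state: "{{ states('media_player.spotify') }}"
        attributes:
          spotify_song: "{{ state_attr('media_player.spotify', 'media_title') }}"
          spotify_artist: "{{ state_attr('media_player.spotify', 'media_artist') }}"
          # entity_picture holds the relative /api/media_player_proxy/... path
          spotify_image: "{{ state_attr('media_player.spotify', 'entity_picture') }}"
```

Keeping everything on one entity means the device only needs to watch a single sensor and its attributes.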

The image is served up by the Home Assistant Spotify integration in the form of a relative URL like “/api/media_player_proxy…” so I can simply pull that path and then, on the device, build the full image URL by prepending the address of my Home Assistant instance. It looks like this:

    return std::string("http://192.168.1.70:8123") + id(address).state;

Here, “address” is the “/api/media_player_proxy” part that I mentioned earlier. When this value is changed, the image downloader component that I use knows to download the new image and display it, and it also fetches the new track title, new artist, and newly generated text from my local LLM.
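One way to wire this up, sketched here on the assumption that the downloader is ESPHome’s `online_image` component (the component used in this project may differ), is to import the path as a text sensor and set a new URL whenever it changes. The `spotify_image` attribute, the `album_art` ID, the JPEG format, and the 300x300 resize target are all assumptions for illustration.

```yaml
# ESPHome sketch: re-download the album art whenever the proxy path changes
http_request:
  verify_ssl: false              # plain HTTP on the local network

text_sensor:
  - platform: homeassistant
    id: address
    entity_id: sensor.spotify_details
    attribute: spotify_image     # assumed attribute name
    on_value:
      - online_image.set_url:
          id: album_art
          url: !lambda |-
            return std::string("http://192.168.1.70:8123") + id(address).state;
      # Depending on the ESPHome version, set_url may already trigger the
      # download, in which case this explicit update is redundant.
      - component.update: album_art

online_image:
  - id: album_art
    url: "http://192.168.1.70:8123"   # placeholder until the first path arrives
    format: JPEG                 # assumed; must match what Home Assistant serves
    type: RGB565                 # 2 bytes per pixel once decoded
    resize: 300x300              # at most 300 * 300 * 2 = 180,000 bytes
    update_interval: never       # only fetch when asked to
```

Resizing on the device keeps the decoded buffer small, which is where the PSRAM headroom matters.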

I went through many iterations of the UI before settling on how it looks. I even tried getting a progress bar working, which I eventually gave up on. Not only did it not really work, but it didn’t look great, and it’s not “live” either. Without interpolation, it would only update every few seconds, so I scrapped it, reworked the UI, and I like it a lot more now. Here’s an old version of how it looked, before I got album art working too:

I much prefer the current version, as I’ve removed the borders, removed the sorry attempt at an indicator bar, and actually got album art working. It used to span the full width of the display as well, but the gap to the right was for the album art. I eventually did get it working in this configuration, but really didn’t like how it looked… So, here we are.

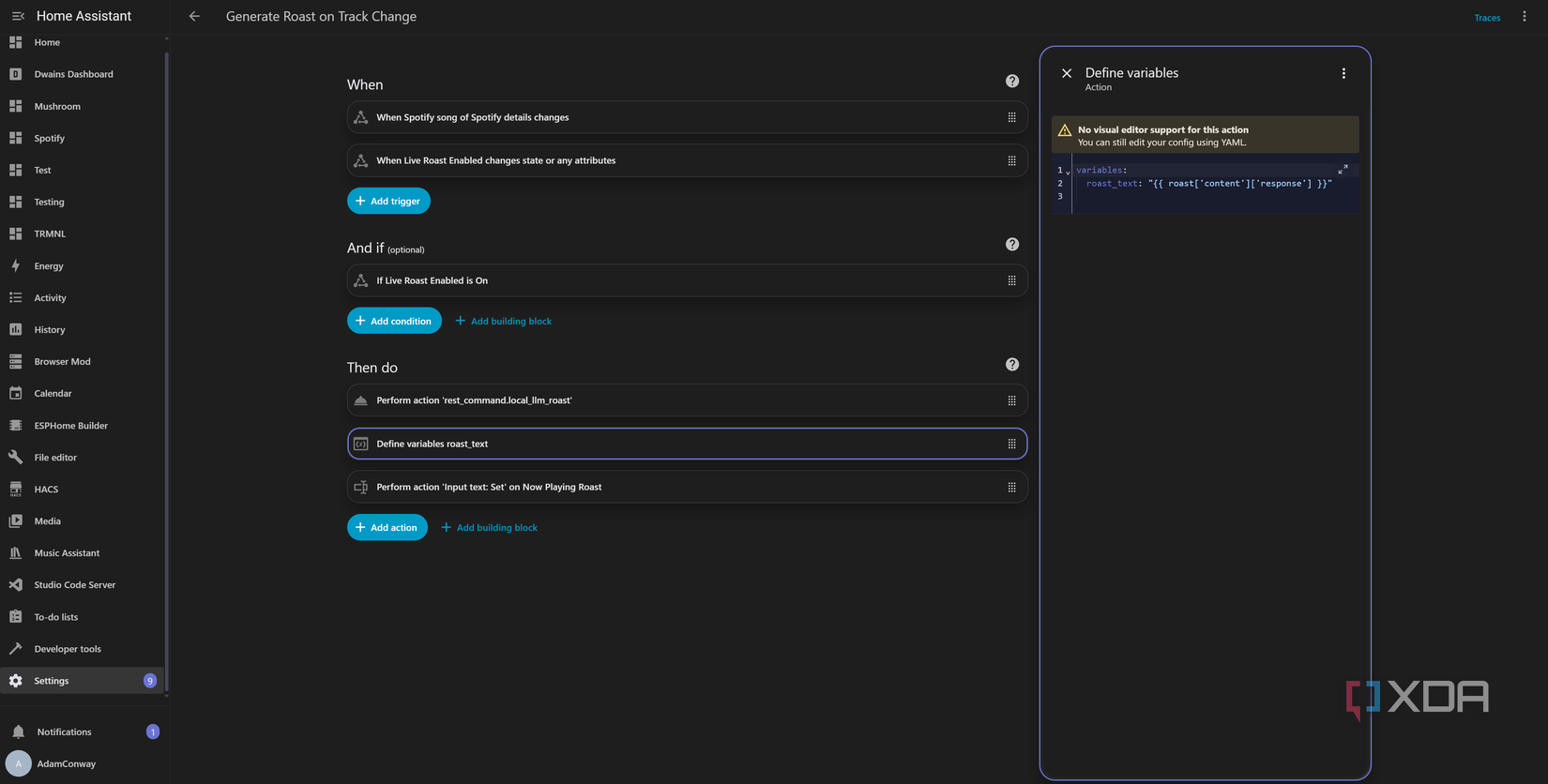

The insulting quips are done primarily via Home Assistant and Ollama, and we’ll break that down next.

Using a local LLM for text generation

Quick and easy

The local LLM was actually fairly easy, comparatively. I’ve done enough with them now to get it up and running quickly, and it was mostly just a copy and paste of my previous uses to pass the right parameters for generation. The difference is that I added an input_boolean to control when text is generated: if it’s off, nothing is generated, and no text is set on the display either.

The rest_command I added to my Home Assistant configuration is the following:

    local_llm_roast:
      url: "http://(ollama):11434/api/generate"
      method: post
      content_type: "application/json"
      payload: >-
        {{ {
          "model":"dolphin-llama3",
          "stream": false,
          "prompt":
            "You are a sarcastic but funny micro-critic.\n"
            "Write a single, insulting, one-sentence critique of the currently playing track. Use your knowledge for the insult if you have any. Keep it short and concise; a few words at most.\n"
            "Title: \"" ~ (state_attr('sensor.spotify_details','spotify_song') or '') ~ "\"\n"
            "Artist: \"" ~ (state_attr('sensor.spotify_details','spotify_artist') or '') ~ "\"\n"
            "Rules: Avoid repeating metadata."
        } | tojson }}
      timeout: 15

The response is then saved to an input text helper for displaying on the screen. Longer text will scroll on the display automatically, so you don’t need to worry about fitting it. The only limit is the input text helper’s maximum length of 255 characters.
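For illustration, the step that calls the command and stores the result could look something like the automation below. This is a sketch rather than the exact automation from my setup: `input_boolean.llm_roast_enabled` and `input_text.llm_roast` are hypothetical helper names, and it assumes the helper’s maximum length has been raised to 255. It relies on rest_command returning the parsed JSON body under `content`, and on Ollama’s non-streaming reply carrying the generated text in its `response` field.

```yaml
# Home Assistant sketch: generate a quip on track change and store it
automation:
  - alias: "Roast the current track"
    trigger:
      - platform: state
        entity_id: sensor.spotify_details
        attribute: spotify_song          # fires when the track title changes
    condition:
      - condition: state
        entity_id: input_boolean.llm_roast_enabled   # hypothetical helper
        state: "on"
    action:
      - service: rest_command.local_llm_roast
        response_variable: roast
      # Stop here if Ollama did not answer successfully
      - condition: template
        value_template: "{{ roast['status'] == 200 }}"
      - service: input_text.set_value
        target:
          entity_id: input_text.llm_roast            # hypothetical helper
        data:
          # Trim whitespace and cut to the 255-character helper limit
          value: "{{ (roast['content']['response'] | trim)[:255] }}"
```

Because the input_boolean is checked as a condition, switching it off stops both the request and the update of the text helper.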

I’m using a basic eight-billion-parameter model for this, Dolphin-Llama 3, and the generations are near-instantaneous. It’s small, quick, and perfect for a one-sentence response without even needing a large context window. For some tools, like my email triage assistant, a larger context window is required, but the default Ollama context size here (which is used when none is specified) gets the job done.
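If a tool did need a different context size, Ollama’s generate endpoint accepts an `options` object in the request body, and `num_ctx` sets the context window. The variant below is only a sketch: the command name, the stand-in prompt, and the value 4096 are arbitrary choices for illustration.

```yaml
# Home Assistant sketch: same endpoint, with an explicit context size
rest_command:
  local_llm_roast_ctx:                   # hypothetical variant name
    url: "http://(ollama):11434/api/generate"
    method: post
    content_type: "application/json"
    payload: >-
      {{ {
        "model": "dolphin-llama3",
        "stream": false,
        "prompt": "Say hello in five words.",
        "options": { "num_ctx": 4096 }
      } | tojson }}
    timeout: 15
```

For one-sentence quips there is no need for this, which is why the original command leaves `options` out entirely.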

You can set this up, too

And learn a lot about LVGL

If you want to set this up, I’ve put all of the code in my Home Assistant GitHub repository. It works pretty well out of the box, and you can tweak it and change it for your hardware depending on what you use. So long as you have enough PSRAM, the album art will work. If album art doesn’t work, check your device logs, as chances are the lack of RAM is the problem.

Projects like these are a lot of fun; not necessarily because they’re useful, but because they can teach you a lot. LVGL’s layouts are still something that I find difficult at times, but through this project and trial and error, I feel like I finally have a grasp on how to do it the right way. What I learned making something silly can actually help me build something useful, but the silly thing was silly enough to keep me entertained and focused on it throughout.

As a Spotify media controller, it works great, and it’s nice to have a dedicated display that shows me what’s playing at any given time. And the little insult below it is fun, too. Not because it’s particularly “funny”, but because it’s entertaining to see the next annoying bit of text associated with the music I’m listening to at any given moment.
